TL;DR: We propose a text-to-image model that precisely controls the amount and location of defocus blur in generated images while preserving the scene content.

Abstract

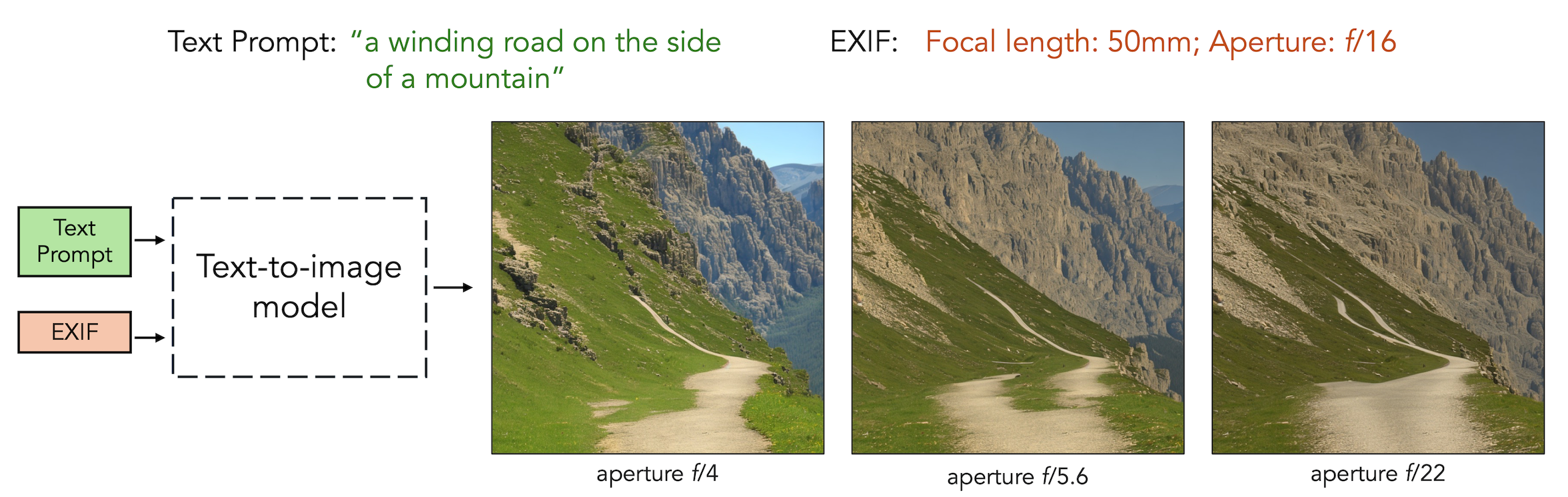

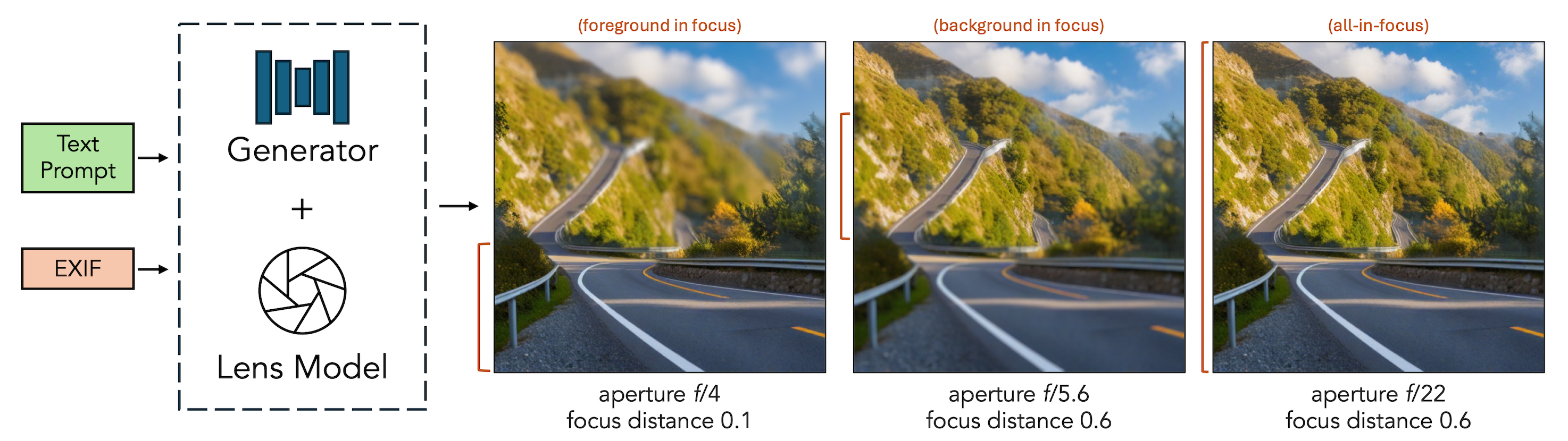

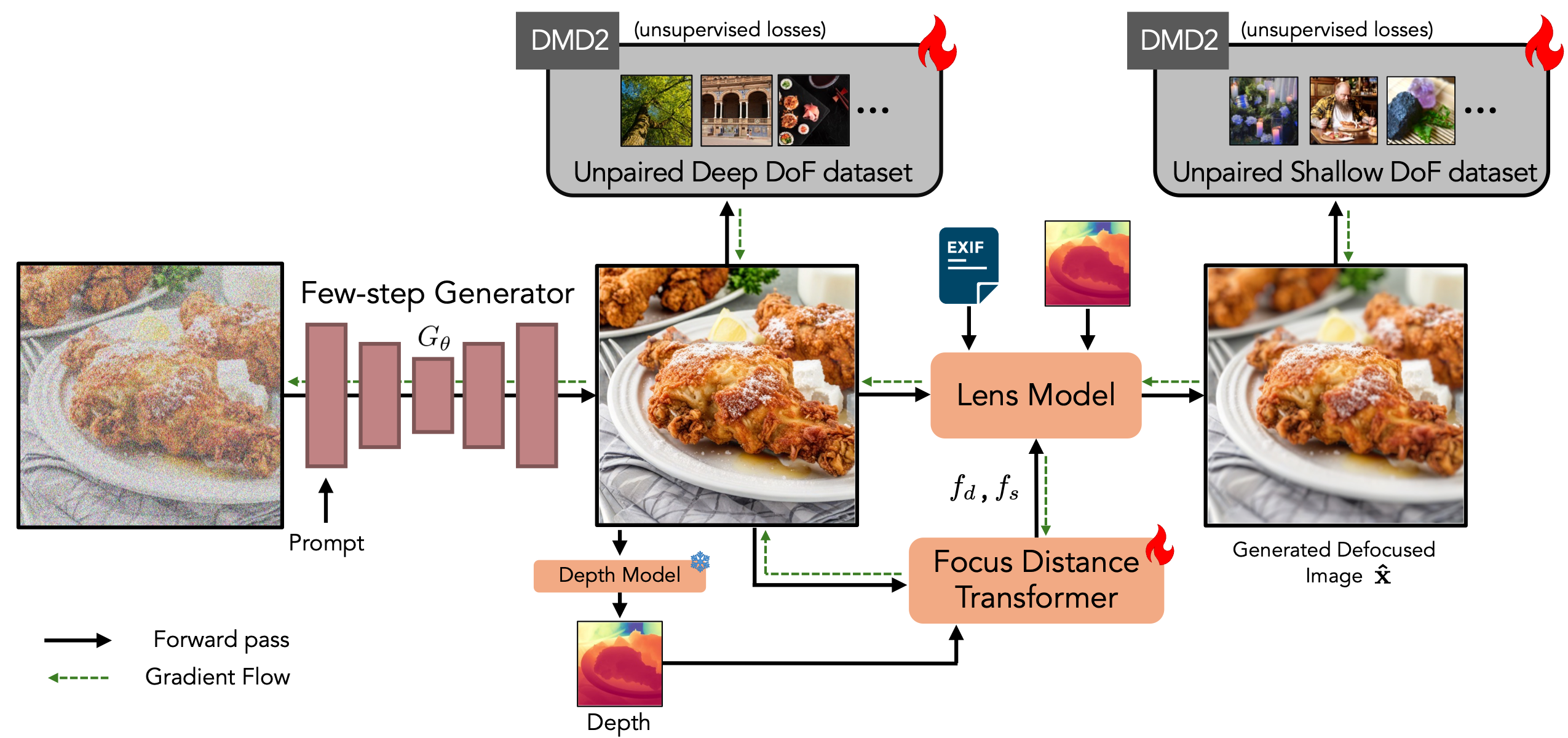

Current text-to-image diffusion models excel at generating diverse, high-quality images, yet they struggle to incorporate fine-grained camera metadata such as precise aperture settings. In this work, we introduce a novel text-to-image diffusion framework that leverages camera metadata (EXIF data, which is often embedded in image files), with an emphasis on generating controllable lens blur. Our method mimics the physical image formation process: it first generates an all-in-focus image, estimates its monocular depth, predicts a plausible focus distance with a novel focus distance transformer, and then forms a defocused image with an existing differentiable lens blur model [2]. Gradients flow backward through this entire process, allowing the model to learn, without explicit supervision, to generate defocus effects based on scene content and the provided EXIF data. At inference time, this enables precise, interactive user control over defocus effects while preserving scene contents, which is not achievable with existing diffusion models. Experimental results demonstrate that our model enables superior fine-grained control without altering the depicted scene.
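The link between EXIF aperture settings and the amount of blur can be illustrated with the textbook thin-lens circle-of-confusion (CoC) formula. The sketch below is only an illustration under that idealized thin-lens assumption; `coc_diameter_mm` is a hypothetical helper, not part of the authors' code or of the lens blur model [2]. It shows how the f-number and focal length from EXIF, together with a focus distance and per-pixel depth, determine how strongly each point is blurred.

```python
import numpy as np


def coc_diameter_mm(depth_m, focus_dist_m, focal_length_mm, f_number):
    """Thin-lens circle-of-confusion diameter on the sensor, in millimetres.

    depth_m may be a scalar or an array of per-pixel scene depths (metres).
    Assumes focus_dist_m > focal length and depth_m > 0.
    """
    f = focal_length_mm / 1000.0        # focal length in metres
    aperture = f / f_number             # aperture diameter A = f / N
    depth = np.asarray(depth_m, dtype=np.float64)
    # c = A * |S2 - S1| / S2 * f / (S1 - f), with S1 = focus distance, S2 = depth
    coc_m = aperture * np.abs(depth - focus_dist_m) / depth * f / (focus_dist_m - f)
    return coc_m * 1000.0


depths = np.array([2.0, 4.0])           # in-focus subject, background
wide = coc_diameter_mm(depths, focus_dist_m=2.0, focal_length_mm=50, f_number=2.0)
narrow = coc_diameter_mm(depths, focus_dist_m=2.0, focal_length_mm=50, f_number=8.0)
print(wide, narrow)
```

The subject at the focus distance stays sharp (zero CoC) at any aperture, while the background blur scales inversely with the f-number: at f/2 it is four times larger than at f/8. Content at the focal plane is unaffected by the aperture, which is the kind of content-preserving control the abstract describes.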

Our model obtains its supervision from a differentiable lens model and from training examples of images with shallow and deep depth of field. The generator $G_{\theta}$ first produces an all-in-focus image. A depth model then predicts depth for this image; the depth map and the image are fed into a model that estimates the focus distance $f_d$ and the depth scale $f_s$. Finally, a lens model combines the EXIF data with these predictions to apply spatially varying blur, producing the final image. We train the all-in-focus generator using unsupervised DMD2 [3] losses on our unpaired Deep DoF dataset and optimize the entire pipeline with DMD2 losses on the unpaired Shallow DoF dataset.
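To make the last stage concrete, here is a minimal NumPy sketch of how a focus distance, a depth scale, and EXIF values could jointly drive a spatially varying blur. Several assumptions apply. The function name `render_defocus` and the `sensor_width_mm` parameter (a 36 mm full-frame sensor by default) are hypothetical. Metric depth is assumed to be `depth_scale * rel_depth`, a guess at the role of $f_s$. The naive per-pixel disk "gather" ignores occlusion and is a simplified stand-in, not the differentiable lens blur model [2] used in the pipeline.

```python
import numpy as np


def render_defocus(image, rel_depth, focus_dist_m, depth_scale,
                   focal_length_mm, f_number, sensor_width_mm=36.0):
    """Naive spatially varying disk blur driven by a thin-lens CoC map.

    image:     (H, W, C) all-in-focus image as floats.
    rel_depth: (H, W) positive relative depth from a monocular depth model.
    Assumes metric depth = depth_scale * rel_depth (hypothetical mapping) and
    ignores occlusion, so it is NOT a faithful or differentiable lens model.
    """
    h, w, _ = image.shape
    depth_m = np.maximum(depth_scale * rel_depth, 1e-3)
    f = focal_length_mm / 1000.0
    aperture = f / f_number
    coc_m = aperture * np.abs(depth_m - focus_dist_m) / depth_m * f / (focus_dist_m - f)
    # Sensor-plane CoC diameter (metres) -> blur radius in pixels.
    radius_px = 0.5 * coc_m * w / (sensor_width_mm / 1000.0)

    max_r = int(np.ceil(radius_px.max()))
    ys, xs = np.mgrid[-max_r:max_r + 1, -max_r:max_r + 1]
    offset_dist = np.hypot(ys, xs)
    padded = np.pad(image, ((max_r, max_r), (max_r, max_r), (0, 0)), mode="edge")
    out = np.empty_like(image, dtype=np.float64)
    for y in range(h):
        for x in range(w):
            # Gather: average all neighbours inside this pixel's own disk
            # (the centre offset has distance 0, so it is always included).
            disk = offset_dist <= radius_px[y, x]
            patch = padded[y:y + 2 * max_r + 1, x:x + 2 * max_r + 1]
            out[y, x] = patch[disk].mean(axis=0)
    return out, radius_px


rng = np.random.default_rng(0)
img = rng.random((64, 64, 3))
rel = np.ones((64, 64))
rel[:, 32:] = 10.0  # left half on the focal plane, right half far behind it
out, r = render_defocus(img, rel, focus_dist_m=1.0, depth_scale=1.0,
                        focal_length_mm=85, f_number=1.4)
```

In this toy scene the left half lies exactly at the focus distance and is returned unchanged, while the right half is averaged over a disk of roughly 4.5 pixels in radius. Changing only `f_number` rescales that radius without touching the in-focus content. A practical, trainable version would replace the Python loops with a differentiable, occlusion-aware renderer so that gradients can reach $f_d$, $f_s$, and $G_{\theta}$.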

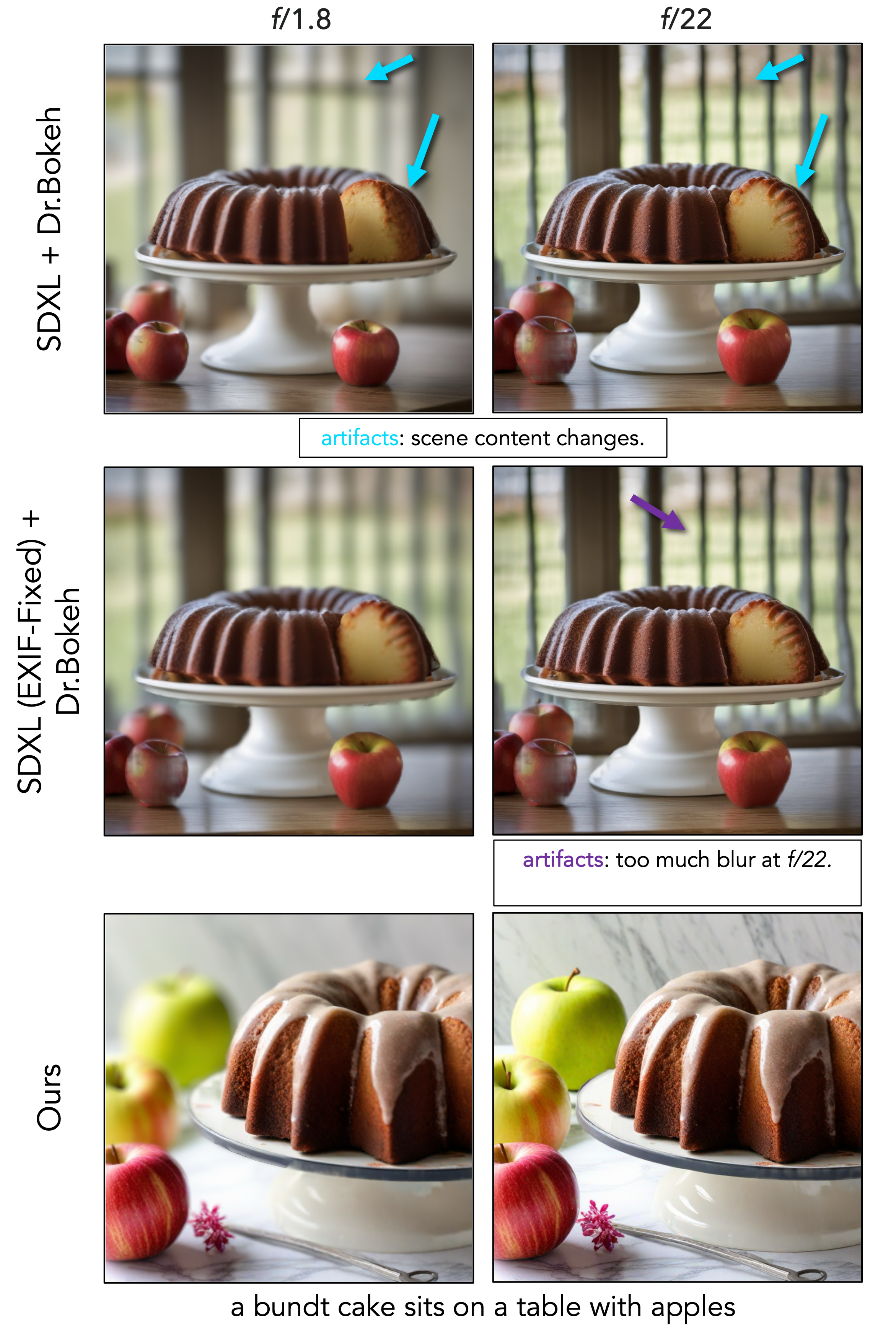

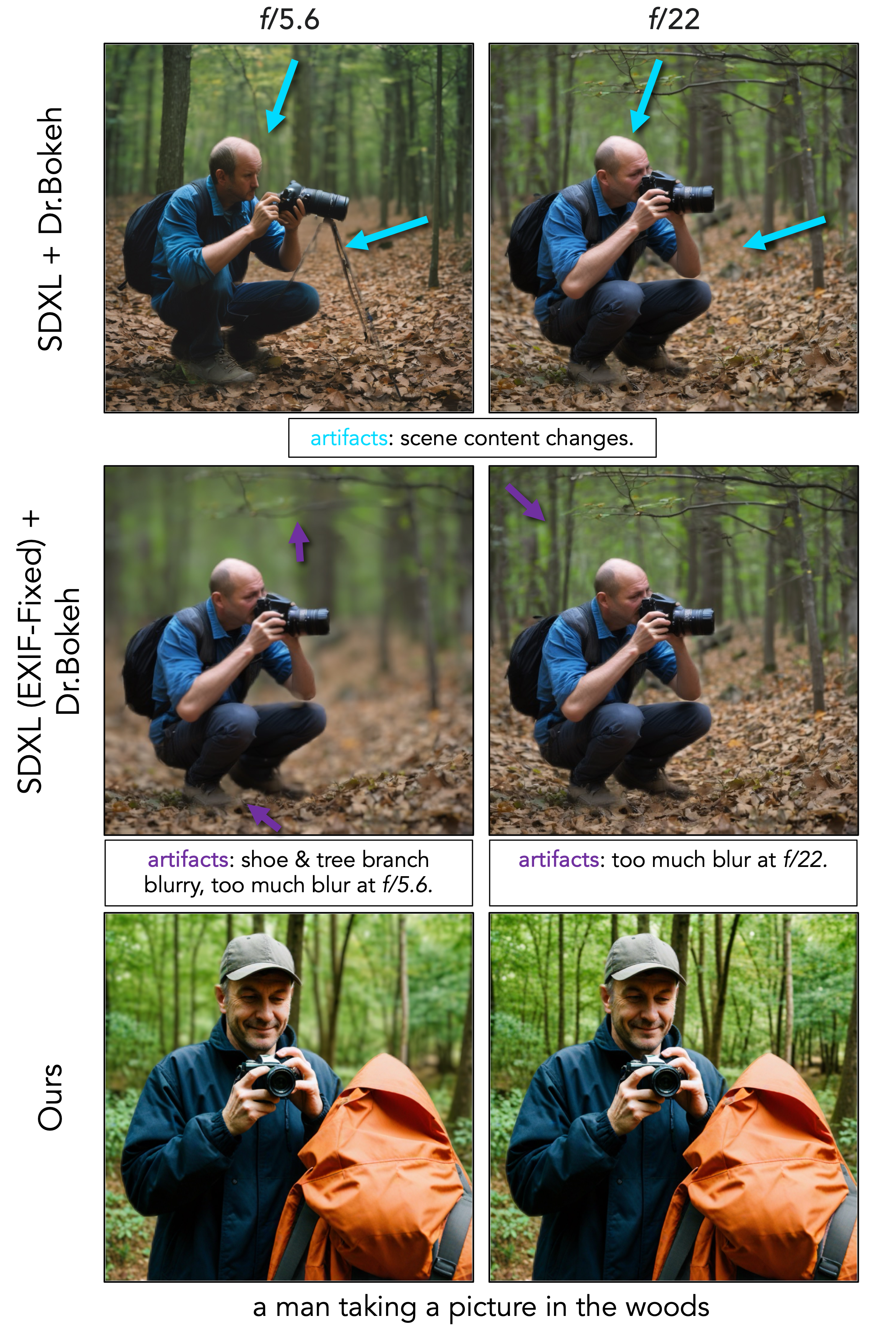

Results

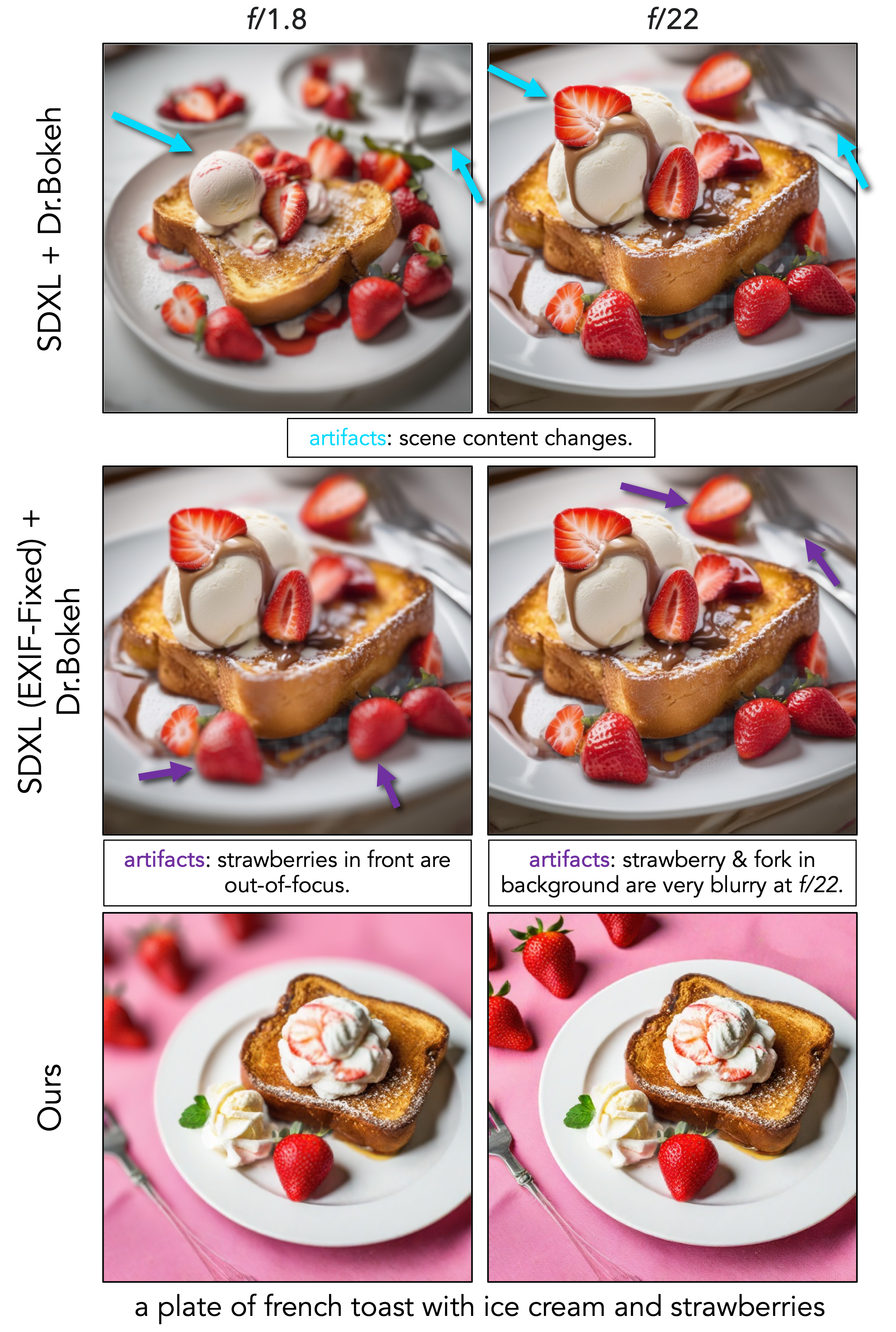

Comparisons with SDXL + Dr.Bokeh

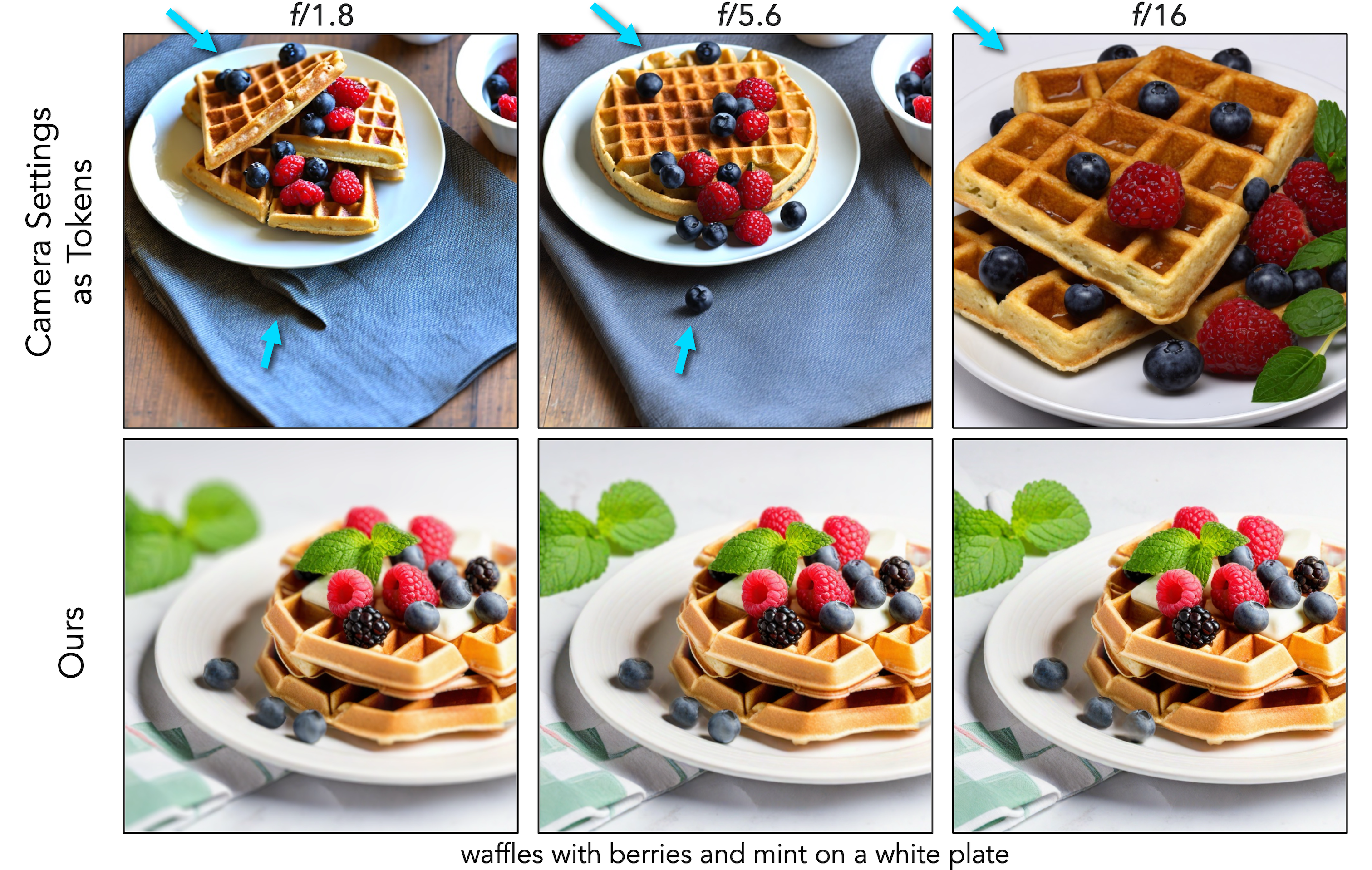

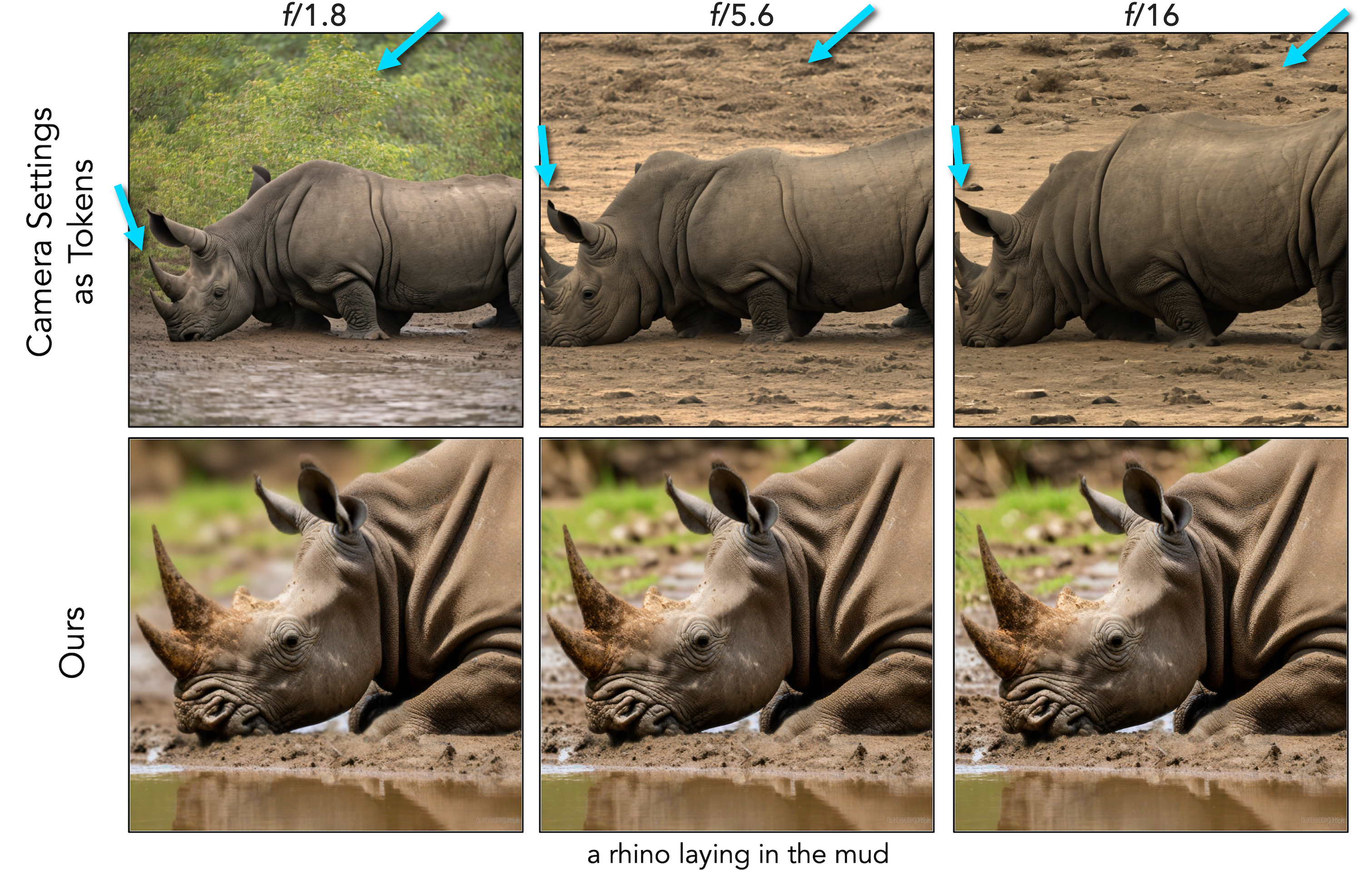

Comparisons with Camera Settings as Tokens

Comparisons with the EBB! Dataset
